Animate Static Portraits with Live Portrait

Transform static images into lifelike animations with Live Portrait. Create realistic facial expressions, smooth head movements, and precise lip synchronization from a single photo. Experience high-quality, efficient portrait animation with unparalleled control and versatility.

Live Portrait Key Features

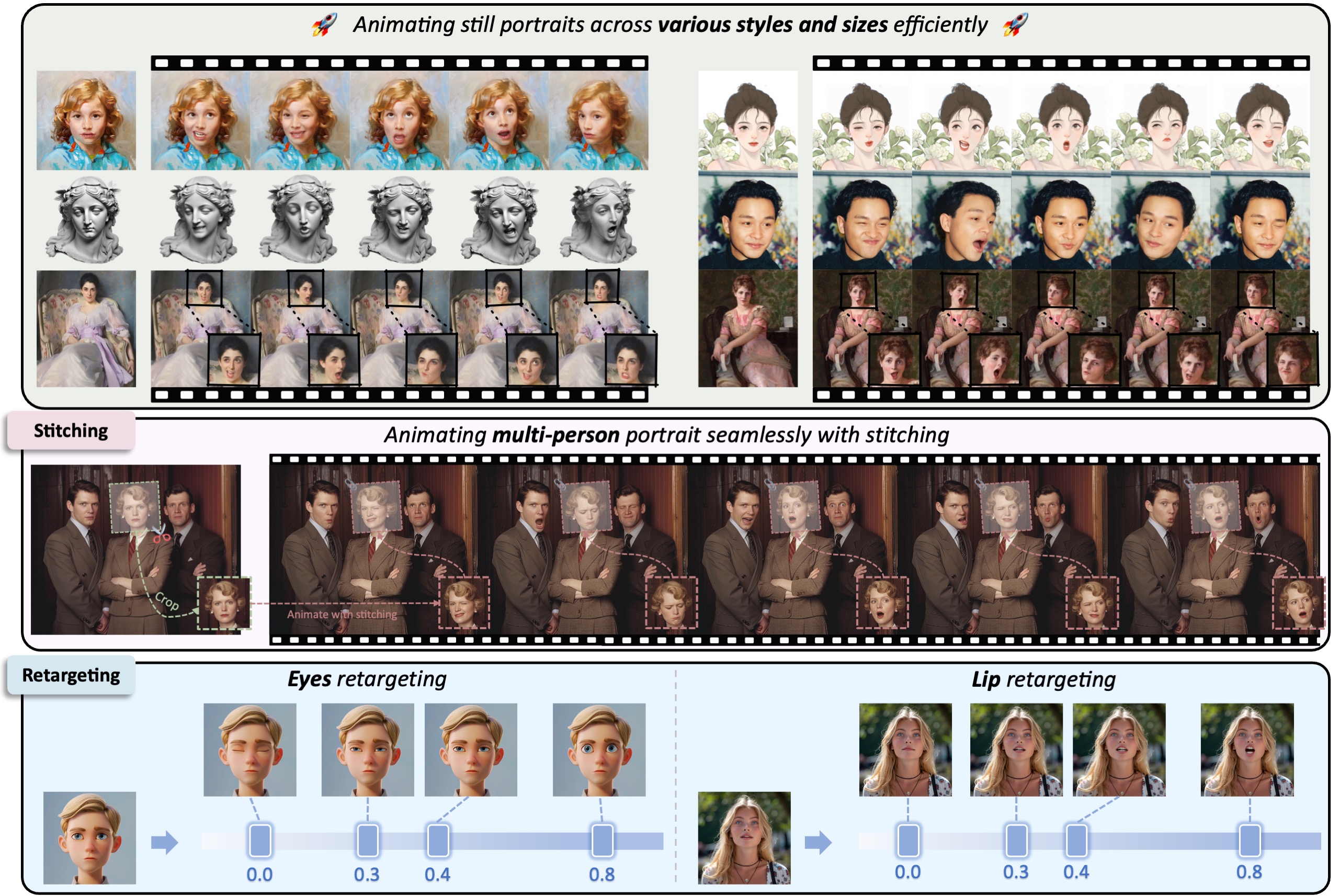

Multi-Style Portrait Animation

Live Portrait animates still images across various styles and sizes using stitching technology.

Portrait Video Editing with Stitching

Live Portrait offers dynamic video-to-video face animation and editing capabilities using stitching technology.

Precise Eye Animation Control

Live Portrait dynamically adjusts eye openness using scalar inputs, enabling nuanced expression control.

Accurate Lip Movement Control

Live Portrait fine-tunes lip positions with scalar-based inputs, allowing for precise speech and expression animation.

Advanced Self-reenactment

Live Portrait generates high-quality animations from a single source frame, outperforming existing methods in recreating dynamic video sequences.

Powerful Cross-reenactment

Live Portrait accurately transfers motion between different portraits, handling diverse scenarios from large pose changes to subtle expressions and multi-person inputs.

How Live Portrait Works

A bird's-eye view of this cutting-edge AI technology

Feature Extraction

Built on the face-vid2vid model and trained on a large dataset of high-quality images and videos, Live Portrait extracts key appearance and motion features from the source image and driving video.

Implicit Keypoint Animation

An innovative implicit keypoint framework maps motion from the driving video onto the portrait, ensuring efficient and controllable animation.
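As a rough illustration of how implicit keypoints can carry motion, the sketch below applies the kind of transform commonly used by implicit-keypoint methods: canonical keypoints from the source are rotated by the head pose, shifted by an expression offset, then scaled and translated. It is simplified to 2D with a single in-plane rotation, and every name and value in it (`transform_keypoints`, the sample keypoints, the pose numbers) is an assumption made for illustration, not Live Portrait's actual code.

```python
import math

def rotate_2d(point, angle_rad):
    """Rotate a 2D point counter-clockwise around the origin."""
    x, y = point
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return (c * x - s * y, s * x + c * y)

def transform_keypoints(canonical, angle_rad, expression, scale, translation):
    """Apply x = scale * (R(x_c) + delta) + t to every canonical keypoint."""
    out = []
    for kp, delta in zip(canonical, expression):
        rx, ry = rotate_2d(kp, angle_rad)
        out.append((scale * (rx + delta[0]) + translation[0],
                    scale * (ry + delta[1]) + translation[1]))
    return out

# Hypothetical canonical keypoints taken from the source portrait.
canonical = [(1.0, 0.0), (0.0, 1.0)]
no_expression = [(0.0, 0.0), (0.0, 0.0)]

# Source frame: neutral pose, no expression offset.
source_kps = transform_keypoints(canonical, 0.0, no_expression, 1.0, (0.0, 0.0))

# Driving frame: quarter turn, small expression offset, larger scale, shifted right.
driving_kps = transform_keypoints(
    canonical, math.pi / 2, [(0.0, 0.1), (0.0, 0.0)], 2.0, (1.0, 0.0)
)
```

The point of the design is that identity lives in the canonical keypoints while pose, expression, scale, and translation come from the driving frame, so the same portrait can be re-posed frame by frame without re-extracting its appearance.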

High Efficiency Video Synthesis

An optimized decoder rapidly generates each frame, creating smooth animations efficiently while maintaining the source image's identity.

Precision Control and Enhancement

Stitching and retargeting modules refine the output, allowing for fine-grained control over facial expressions and movements with minimal computational overhead.
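To make the idea of a lightweight retargeting module concrete, here is a minimal sketch of a small fully connected network that takes flattened keypoints plus one scalar (for example, a desired eye-openness value) and returns per-keypoint offsets. The layer sizes, the random untrained weights, and the names `mlp_forward`, `make_layer`, and `retarget` are all assumptions for illustration; a real module would use trained weights and the project's own keypoint layout.

```python
import random

def mlp_forward(x, layers):
    """Run a plain fully connected network with ReLU between layers."""
    for i, (weights, biases) in enumerate(layers):
        x = [sum(w * v for w, v in zip(row, x)) + b
             for row, b in zip(weights, biases)]
        if i < len(layers) - 1:  # no activation after the last layer
            x = [max(0.0, v) for v in x]
    return x

def make_layer(n_in, n_out, rng):
    """Create one layer with small random weights and zero biases (untrained)."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def retarget(keypoints, openness, layers):
    """Add network-predicted offsets to 2D keypoints, conditioned on one scalar."""
    flat = [c for kp in keypoints for c in kp]
    offsets = mlp_forward(flat + [openness], layers)
    return [(x + offsets[2 * i], y + offsets[2 * i + 1])
            for i, (x, y) in enumerate(keypoints)]

rng = random.Random(0)
keypoints = [(0.2, 0.3), (0.5, 0.6), (0.8, 0.3)]  # hypothetical eye-region keypoints
# 3 keypoints * 2 coordinates + 1 scalar = 7 inputs; 6 outputs (one offset per coordinate).
layers = [make_layer(7, 16, rng), make_layer(16, 6, rng)]

half_open = retarget(keypoints, 0.5, layers)
wide_open = retarget(keypoints, 1.0, layers)
```

Because the network only nudges a handful of keypoints rather than regenerating pixels, a module of this shape adds very little cost on top of the main generator.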

How to Use Live Portrait

Transform your static photo into a lifelike animation in just three simple steps.

Upload Portrait Image

Upload a portrait photo with a clear face. Live Portrait will extract facial features from this image for animation purposes.

Select or Upload Driving Video

Choose or upload a driving video showing facial movements. This video will guide the facial motion generation for your portrait image.

Generate Animation

Click 'Animate', then adjust the configuration parameters if desired or keep the defaults. Click to generate and wait for the process to complete.
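For readers who prefer to run the open-source code locally rather than use the web interface, the same three steps can be sketched as a small helper that checks the two inputs and assembles a command line. This is a sketch under stated assumptions: the script name `inference.py`, the `-s` (source) and `-d` (driving) flags, and the accepted file extensions are assumptions that should be checked against the project's README, and the file names are placeholders.

```python
from pathlib import Path

# Assumed input formats; confirm against the project's documentation.
IMAGE_EXTS = {".jpg", ".jpeg", ".png"}
VIDEO_EXTS = {".mp4"}

def build_inference_command(source, driving, script="inference.py"):
    """Validate the portrait and driving video paths, then build the argv list.

    The script name and the -s / -d flags are assumptions, not confirmed API.
    """
    source, driving = Path(source), Path(driving)
    if source.suffix.lower() not in IMAGE_EXTS:
        raise ValueError(f"source must be an image, got {source.suffix!r}")
    if driving.suffix.lower() not in VIDEO_EXTS:
        raise ValueError(f"driving input must be a video, got {driving.suffix!r}")
    return ["python", script, "-s", str(source), "-d", str(driving)]

# Step 1: portrait image. Step 2: driving video. Step 3: the command to run.
command = build_inference_command("portrait.jpg", "driving.mp4")
```

The helper only builds the command; passing the resulting list to `subprocess.run` inside a checkout of the repository would start the generation step.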

Live Portrait FAQs

Find answers to common questions about Live Portrait, its features, and usage.

1. What is Live Portrait?

Live Portrait is a video-driven portrait animation framework that focuses on better generalization, controllability, and efficiency for practical usage. It's designed to synthesize lifelike videos from a single source image.

2. What are the main features of Live Portrait?

Live Portrait generates realistic videos from a single source image, which serves as the appearance reference, while motion (facial expressions and head pose) comes from a driving video. It supports self-reenactment, cross-reenactment, eye and lip retargeting control, and a range of styles (realistic, oil painting, sculpture, 3D rendering).

3. Who developed Live Portrait?

Live Portrait was developed by researchers from Kuaishou Technology, University of Science and Technology of China, and Fudan University.

4. What core technologies does Live Portrait use?

Live Portrait explores and extends the potential of the implicit-keypoint-based framework, balancing computational efficiency and controllability. It uses a mixed image-video training strategy, an upgraded network architecture, and improved motion transformation and optimization objectives. It also incorporates stitching and retargeting modules built from small MLPs for enhanced control.

5. What are the application scenarios for Live Portrait?

Live Portrait can be used for various portrait animation scenarios, including self-reenactment, cross-reenactment, portrait animation from still images, portrait video editing, eye and lip retargeting control, and animation across different styles. It can also be fine-tuned for animal animation (cats, dogs, pandas).

6. What is the performance of Live Portrait?

Live Portrait achieves a generation speed of 12.8 ms per frame on an RTX 4090 GPU using PyTorch. The project used about 69 million high-quality frames for training to enhance generation quality and generalization ability.
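To put the speed figure in perspective, the short calculation below converts it to frames per second and estimates how long a clip would take to generate. It assumes the 12.8 ms figure is a per-frame generation time and ignores loading, cropping, and video encoding; the 10-second, 25 fps clip is a hypothetical example.

```python
MS_PER_FRAME = 12.8  # generation time quoted for an RTX 4090 with PyTorch

def frames_per_second(ms_per_frame):
    """Convert a per-frame latency in milliseconds to throughput in frames per second."""
    return 1000.0 / ms_per_frame

def generation_seconds(num_frames, ms_per_frame):
    """Estimate the generation time in seconds for a clip with num_frames frames."""
    return num_frames * ms_per_frame / 1000.0

fps = frames_per_second(MS_PER_FRAME)                  # about 78 frames per second
clip_seconds = generation_seconds(250, MS_PER_FRAME)   # 10 s at 25 fps: about 3.2 s
```

At that rate, generation alone would run faster than real-time playback for typical video frame rates.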

7. How can I get more information about the Live Portrait open-source project?

You can find the inference code and models at https://github.com/KwaiVGI/LivePortrait. More detailed information and experimental results are available on the project's GitHub page.

8. How can I start using Live Portrait for free?

To start using Live Portrait for free, visit the free playground at https://live-portrait.org/free-playground.